Policing the platforms: Does social media need independent guidelines?

24 February 2021

Jon Guinness & Sumant Wahi, Portfolio Managers, Fidelity Future Connectivity Fund

Questions of digital ethics

The internet has transformed the global economy. But the arrival of social media giants such as Facebook and Twitter has raised new ethical questions. These debates directly affect the sustainability and long-term returns of companies in our investment universe. We are particularly interested in digital ethics around:

- Misinformation: the commitment to truthful and honest debate on social media platforms

- Online fraud: protecting users from online criminality

- Privacy: safeguarding users’ privacy and control over their data

- Online welfare: combating harmful content (e.g. racism, sexual discrimination, criminal incitement) and promoting user welfare in the broadest sense

A duty to safeguard free speech

The bitter nature of the US election and the banning of Donald Trump from social media platforms after protesters stormed the Capitol have led to a heated debate over the ethics of free speech and who controls what is published.

The global reach of Facebook and Twitter makes them attractive investments. But we believe their dominance in internet publishing means they have an inherent duty to ensure impartiality, truthfulness and the safeguarding of free speech. If social media companies fail to do this, they risk reputational damage and punitive regulation from politicians seeking to counter perceived biases. They may even be turned into utility-like entities. This would preserve their monopolies, but at the cost of heavy regulation that could hamper their ability to innovate and grow.

However, internet businesses cannot be expected to wrestle with philosophical issues around free speech on their own. It is for society as a whole to decide what is and is not acceptable. Social media companies can take steps to address the issue to some extent, but governments and independent regulators must play a central role in shaping good guidelines, focused on promoting open debate without it veering into misinformation and abuse.

Drawing the line between free speech and incitement

Social media firms in the US currently benefit from being able to claim immunity from liability for the opinions expressed on their platforms, under Section 230 of the US Communications Decency Act. At the same time, they reserve the right to ban whomever they wish for any reason. They can also suppress important press stories if they so choose. This gives social media companies immense power that they wield unevenly.

In 2017, for example, web infrastructure company Cloudflare removed a neo-Nazi website from the internet after a violent far-right rally in Charlottesville, Virginia. There were strong justifications for such a move. However, Cloudflare executives had previously defended hosting a number of unsavoury forums on the grounds of free speech. Following the Charlottesville incident, the CEO commented, “Literally, I woke up in a bad mood and decided someone shouldn’t be allowed on the Internet.” He may have been flippant, but this statement and other actions from social media companies show the lack of clearly defined frameworks around moderating online speech.

Facebook has been vocal about the need for such frameworks. CEO Mark Zuckerberg recently commented: “It would be very helpful to us and the internet sector overall for there to be clear rules and expectations on some of these social issues around how content should be handled, around how elections should be handled, around what privacy norms governments want to see in place, because these questions all have trade-offs.”

Repeal of immunity law and antitrust moves create risks for platforms

The absence of clear laws for social media platforms to follow is a pressing issue, because a repeal of Section 230 looks possible. Both Democrats and Republicans have made the case for it in recent months. Aside from significantly increasing the costs of monitoring content for social media firms, overturning Section 230 could push these companies to adopt ultra-conservative approaches to supervising content, severely restricting the dissemination of information and online debate.

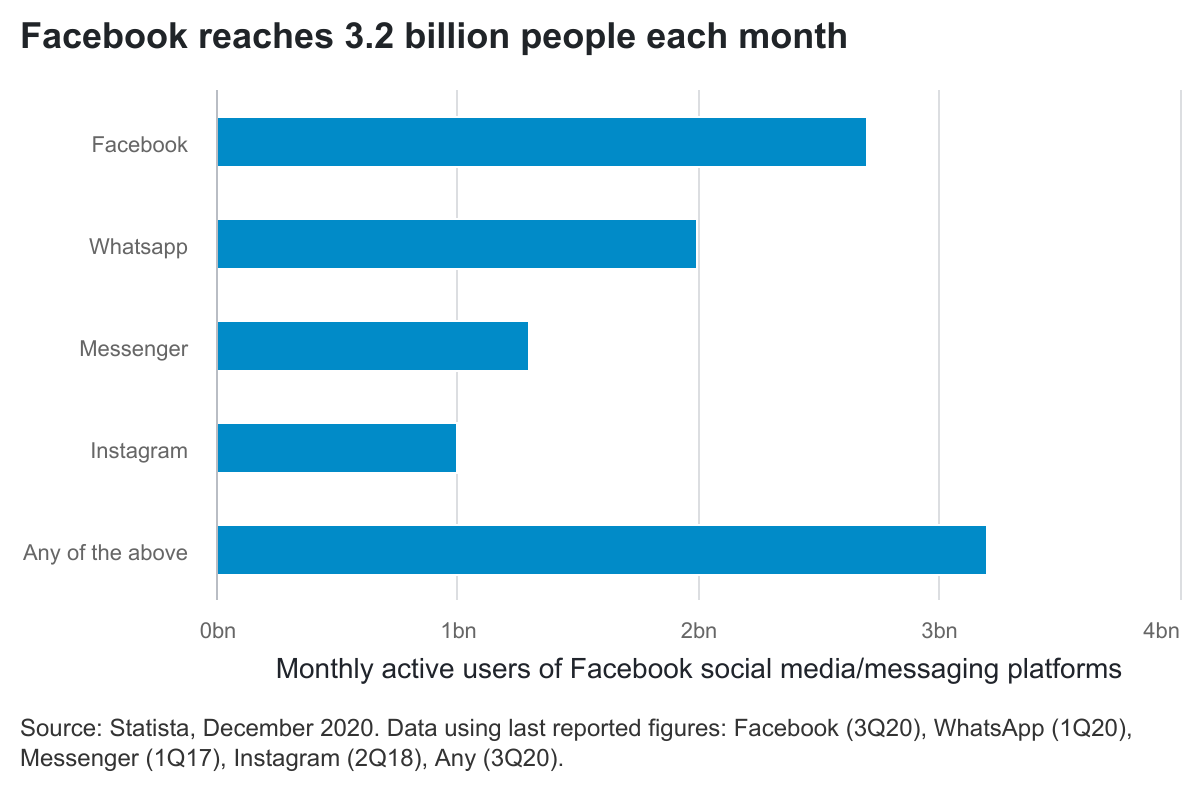

Regulation on antitrust grounds is another potential risk. Facebook and its network reach nearly half the world’s population each month, three times more than equivalent platforms such as WeChat in China. As a result, fair competition in the tech sector has become an increasingly prominent issue over the past 12 months.

The US Federal Trade Commission and attorneys general from 46 states launched antitrust proceedings against Facebook in December 2020 over its acquisitions of Instagram and WhatsApp. In Australia, the government is attempting to legislate on a ‘News Media Bargaining Code’ for internet firms, which would force the likes of Facebook and Google to negotiate payments to third-party media companies for the content they use. European regulators are preparing new laws that will make it easier to launch investigations, curb growth into new product areas and bar tech firms from giving their own products preferential treatment in their digital stores.

Social media companies need to act now

Given such high levels of interest in potential curbs on platforms, we believe that social media companies must take a lead on policing their networks and not wait for government regulation. This may generate additional costs, but they will be far lower than the costs of inaction. The Trump ban is unlikely to have caused any negative financial impact as many brands shied away from his polarising tone, but the intense pressure put on platforms to make these decisions has implications for the future. Companies such as Facebook already recognise the urgent need for a new approach and are taking steps to reduce political polarisation and improve the quality of content oversight and review.

Independent oversight boards can form part of the solution, at least until more permanent frameworks are developed. These boards could be composed of lawyers, academics, journalists and political experts, and function in a quasi-judicial role to review cases, monitor content and contribute to policies. Social media companies can set up these panels themselves, as Facebook has. However, it’s important that they are seen as independent and balanced in order to have credibility with the public and to be considered a legitimate alternative to tough regulation. Unusual cases, such as that of Trump, will only proliferate over time as social media wields ever more influence over our lives.

Important information

This information is for investment professionals only and should not be relied upon by private investors. The value of investments (and the income from them) can go down as well as up and you may not get back the amount invested. Past performance is not a reliable indicator of future returns. Investors should note that the views expressed may no longer be current and may have already been acted upon. Investments in small or emerging markets may be more volatile than other more developed markets. Changes in currency exchange rates may affect the value of investments in overseas markets. The FF Future Connectivity Fund can use financial derivative instruments for investment purposes, which may expose the fund to a higher degree of risk and can cause investments to experience larger than average price fluctuations. Reference in this document to specific securities should not be interpreted as a recommendation to buy or sell these securities and is only included for illustration purposes. Issued by Financial Administration Services Limited and FIL Pensions Management, authorised and regulated by the Financial Conduct Authority. Fidelity, Fidelity International. UKM0221/33535/SSO/NA